Tracing the journey from ancient calculation tools to today’s digital marvels

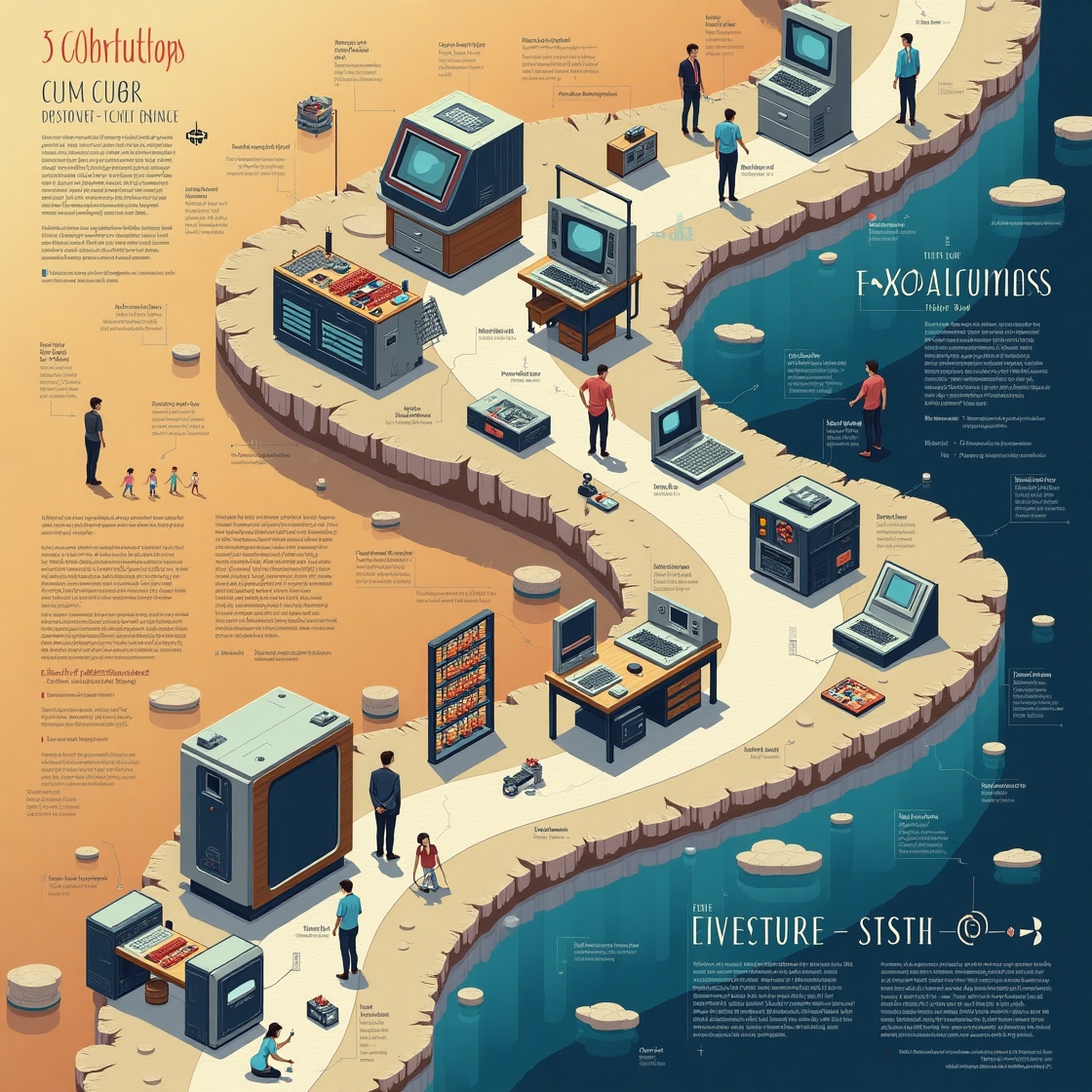

The Evolution of Computing

Introduction

Computers are integral to modern life, yet their origins date back thousands of years. This blog explores how the idea of computing was conceived, the milestones in its development, and the pioneers who shaped technology as we know it.

The First Visionary: Charles Babbage

Charles Babbage (1791–1871), an English mathematician, engineer, and polymath, is often credited as the first person to conceptualize the modern computer. Frustrated by the tedious and error-prone process of hand-calculating mathematical tables, Babbage envisioned a mechanical device, the Difference Engine, to automate those calculations. He later designed the more advanced Analytical Engine, a general-purpose machine whose "mill" and "store" are analogous to a modern CPU and memory, with punched cards for input and a printer for output.
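To get a feel for how a machine can build a mathematical table mechanically, here is a minimal Python sketch of the method of finite differences, the technique the Difference Engine was built around. It tabulates a polynomial using nothing but repeated addition. The function name, the register layout, and the example polynomial are illustrative assumptions, not a model of Babbage's actual mechanism.

```python
def tabulate_by_differences(initial_differences, count):
    """Tabulate a polynomial using only addition.

    initial_differences holds f(0), then the first difference,
    then the second difference, and so on (a hypothetical layout
    chosen for this sketch).
    """
    registers = list(initial_differences)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Add each higher-order difference into the register below it.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# Example polynomial f(x) = x^2 + x + 41:
# f(0) = 41, first difference f(1) - f(0) = 2, constant second difference = 2.
values = tabulate_by_differences([41, 2, 2], 5)
print(values)  # [41, 43, 47, 53, 61]
```

Because every step is an addition, no multiplication is ever needed, which is what made the approach practical for gears and wheels.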

Timeline of Key Developments

- c. 2400 BCE: The Abacus emerges in Mesopotamia—one of the earliest tools for arithmetic.

- 1642: Blaise Pascal builds the Pascaline, a mechanical adding machine.

- 1673: Gottfried Wilhelm Leibniz invents the Stepped Reckoner, capable of addition, subtraction, multiplication, and division.

- 1822–1837: Charles Babbage begins work on the Difference Engine and later designs the Analytical Engine, the first concept for a general-purpose mechanical computer.

- 1843: Ada Lovelace publishes the first algorithm intended for Babbage’s Analytical Engine, for which she is often recognized as the world’s first computer programmer.

- 1936: Alan Turing introduces the concept of the Turing machine in his paper "On Computable Numbers" (published 1936–37), laying theoretical groundwork for computation and algorithms.

- 1939–1945: Early electronic computers, such as the Atanasoff–Berry Computer (prototyped in 1939, completed in 1942) and ENIAC (completed in 1945), bring electronic computing power to life.

- 1956: IBM unveils the first hard disk drive, marking the beginning of magnetic disk storage.

- 1971: Intel releases the 4004, the first commercially available microprocessor.

- 1981: IBM launches the IBM Personal Computer (PC), which sets a lasting industry standard and brings personal computing into the mainstream.

- 1991–2000s: The rise of the Internet, graphical interfaces, and mobile computing transform how we interact with machines.

- 2010s–Present: Cloud computing, AI, and quantum research push the boundaries of what computers can achieve.

Why the Idea Emerged

The concept of an automated computing device arose to solve practical challenges: eliminating human error in calculations, accelerating complex mathematical tasks, and managing burgeoning data. Innovators like Babbage and Turing understood that machines could enhance human capability by following precise instructions, leading to breakthroughs that underpin today’s digital world.
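The idea of a machine "following precise instructions" can be made concrete with a toy Turing machine. The sketch below is a minimal simulator in Python; the rule table, state names, and function name are hypothetical choices for illustration and are not taken from Turing's paper. Each rule maps a (state, symbol) pair to a symbol to write, a direction to move, and a next state.

```python
def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


# Hypothetical rule table: flip every bit, then halt at the first blank cell.
INVERT_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

result = run_turing_machine(INVERT_RULES, "1011")
print(result)  # 0100
```

The machine has no understanding of what it is doing; it only looks up the next rule and applies it, yet that is enough to carry out a well-defined computation.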

Conclusion

From the humble abacus to the sophisticated processors in our smartphones, the journey of computing reflects humanity’s quest for efficiency, accuracy, and innovation. As we look ahead, emerging technologies such as quantum computing, neuromorphic chips, and advanced AI promise to redefine computation once more.

#ComputerHistory #TechEvolution #CharlesBabbage #AdaLovelace #HistoryOfComputing #TuringMachine #InventionOfComputers #TechnologyTimeline #ModernComputing #FromAbacusToAI #InnovationJourney #TechBlog #SmartChoiceLinks
